Equations

General

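$x_1$ and $x_2$ are two samples of sizes $n_1$ and $n_2$, with means $\bar{x}_1$ and $\bar{x}_2$ and standard deviations $s_1$ and $s_2$. In the case of paired samples, $x_1$ and $x_2$ are the two vectors of measurements (e.g., pre and post).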

T-Test

Two-sample t-test

Assuming equal variances

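The $t$ statistic is calculated as:

$$t = \frac{\bar{x}_1 - \bar{x}_2}{SE}, \qquad SE = s_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}, \qquad s_p = \sqrt{\frac{(n_1 - 1) s_1^2 + (n_2 - 1) s_2^2}{n_1 + n_2 - 2}}$$

The critical $t$, denoted $t_c$, is found as the $1 - \alpha/2$ quantile of the $t$ distribution with $\nu = n_1 + n_2 - 2$ degrees of freedom.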

The critical $d$ (effect size) can be calculated from the critical $t$ value $t_c$ as:

$$d_c = t_c \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}$$

The standard error of $d$ needs to be calculated using one of the available equations.

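Some useful conversions:

$$d = t \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}, \qquad t = \frac{d}{\sqrt{\frac{1}{n_1} + \frac{1}{n_2}}}$$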

Finding the pooled standard deviation $s_p$ from the standard error $SE$ of the mean difference and the sample sizes:

$$s_p = \frac{SE}{\sqrt{\frac{1}{n_1} + \frac{1}{n_2}}}$$
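As a quick check of this last conversion, the sketch below recovers the pooled standard deviation from the standard error reported by t.test and compares it with the value computed directly from the two samples. The data are simulated, and the seed, sample sizes, and means are arbitrary assumptions chosen only for illustration.

```r
# Illustrative data: seed, sample sizes, and parameters are arbitrary assumptions
set.seed(2024)
n1 <- 40
n2 <- 60
x1 <- rnorm(n1, mean = 0.5, sd = 1)
x2 <- rnorm(n2, mean = 0, sd = 1)

fit <- t.test(x1, x2, var.equal = TRUE)

# Pooled standard deviation computed directly from the two samples
sp_direct <- sqrt(((n1 - 1) * var(x1) + (n2 - 1) * var(x2)) / (n1 + n2 - 2))

# Pooled standard deviation recovered from the standard error
sp_from_se <- fit$stderr / sqrt(1 / n1 + 1 / n2)

all.equal(sp_direct, sp_from_se)
```

The two values should agree up to floating-point error, also with unequal sample sizes as used here.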

Example converting $t$ to $d$ and computing the critical value:

n <- 100

x <- rnorm(n, 0.5, 1)

y <- rnorm(n, 0, 1)

fit <- t.test(x, y, var.equal = TRUE)

d <- unname(fit$statistic) * sqrt(1/n + 1/n)

c(d, effectsize::cohens_d(x, y)$Cohens_d)

## [1] 0.4414722 0.4414722

tc <- qt(0.05/2, 2*n - 2) # critical t with n1 + n2 - 2 degrees of freedom

abs(tc * fit$stderr) / effectsize::sd_pooled(x, y)

## [1] 0.2788854

abs(tc * sqrt(1/n + 1/n))

## [1] 0.2788854

One-sample t-test

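The critical value is calculated as usual. The degrees of freedom are $n - 1$ and the effect size is:

$$d = \frac{\bar{x} - \mu_0}{s}$$

where $\mu_0$ is the mean under the null hypothesis and $s$ is the sample standard deviation.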
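In the one-sample case the $t$ statistic equals $d \sqrt{n}$, so the critical effect size is the critical $t$ divided by $\sqrt{n}$. A minimal sketch, where the helper name crit_d_one_sample and the values of n and alpha are hypothetical choices for illustration:

```r
# Critical effect size for a two-sided one-sample t-test.
# crit_d_one_sample is a hypothetical helper; n and alpha are illustrative.
crit_d_one_sample <- function(n, alpha = 0.05) {
  tc <- qt(1 - alpha / 2, df = n - 1)  # critical t with n - 1 degrees of freedom
  tc / sqrt(n)                         # d = t / sqrt(n)
}

n <- 30
dc <- crit_d_one_sample(n)

# An observed effect exactly equal to dc should give a p-value equal to alpha
p <- 2 * pt(dc * sqrt(n), df = n - 1, lower.tail = FALSE)
c(critical_d = dc, p_value = p)
```

Any observed effect larger in absolute value than the critical $d$ is significant at the chosen $\alpha$.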

Paired-sample t-test

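Again, the critical value is calculated as usual. The degrees of freedom are $n - 1$, where $n$ is the number of pairs. The effect size can be calculated in several ways. The $d_z$ is calculated by dividing the mean of the differences $\bar{D}$ by the standard deviation of the differences $s_D$:

$$d_z = \frac{\bar{D}}{s_D}$$

Another version of the effect size is calculated in the same way as the two-sample version, thus dividing by the pooled standard deviation:

$$d = \frac{\bar{D}}{s_p}, \qquad s_p = \sqrt{\frac{s_1^2 + s_2^2}{2}}$$

We can convert between the two formulations using the correlation $r$ between the two measurements (the conversion is exact when $s_1 = s_2$):

$$d_z = \frac{d}{\sqrt{2(1 - r)}}, \qquad d = d_z \sqrt{2(1 - r)}$$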
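The conversion between the two paired effect sizes can be checked numerically. The sketch below uses simulated pre and post scores (seed and parameters are arbitrary assumptions) and rescales both vectors to a common standard deviation, since the simple conversion through the correlation holds exactly only in that case.

```r
# Illustrative paired data; every number here is an assumption for the sketch
set.seed(1)
n <- 50
pre <- rnorm(n)
post <- 0.3 + 0.6 * pre + rnorm(n, sd = 0.8)

# Rescale both vectors to unit standard deviation so that s_1 = s_2
pre <- pre / sd(pre)
post <- post / sd(post)

diffs <- post - pre
r <- cor(pre, post)

dz <- mean(diffs) / sd(diffs)            # SD of the differences in the denominator
sp <- sqrt((var(pre) + var(post)) / 2)   # pooled SD (equal to 1 after rescaling)
d <- mean(diffs) / sp                    # pooled SD in the denominator

all.equal(dz, d / sqrt(2 * (1 - r)))
all.equal(d, dz * sqrt(2 * (1 - r)))
```

With unequal standard deviations the exact link is $d_z = d \, s_p / s_D$, and the correlation-based form becomes an approximation.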

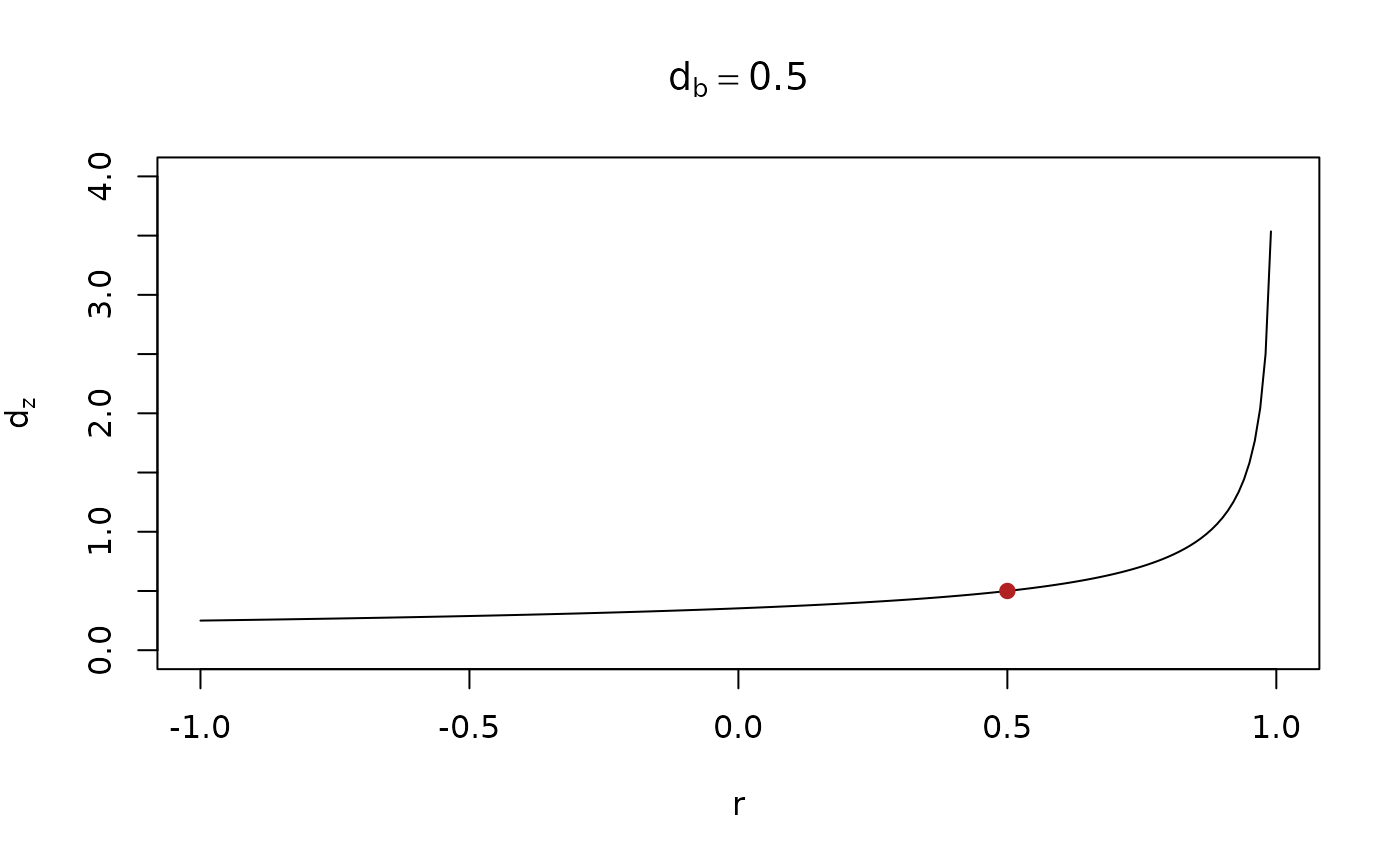

The Figure below depicts the relationship between the effect size calculated using the two methods.

Clearly, the critical value also depends on which effect size we use.
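To make this dependence concrete, the sketch below computes the critical value under both definitions of the paired effect size. The values of n, alpha, and the pre-post correlation r are assumptions chosen for illustration, and equal standard deviations are assumed for the pooled version.

```r
# Critical paired effect sizes under the two definitions.
# n, alpha, and r are illustrative assumptions.
n <- 30
alpha <- 0.05
r <- 0.7

tc <- qt(1 - alpha / 2, df = n - 1)  # critical t with n - 1 degrees of freedom

dz_c <- tc / sqrt(n)                 # critical d_z (SD of the differences)
d_c <- dz_c * sqrt(2 * (1 - r))      # critical d (pooled SD, equal SDs assumed)

c(dz_c = dz_c, d_c = d_c)
```

The two critical values coincide when $r = 0.5$; for larger correlations the critical value on the pooled scale is the smaller of the two.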

Correlation test

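The cor.test function implements a test statistic based on the Student $t$ distribution:

$$t = \frac{r}{SE_r}, \qquad SE_r = \sqrt{\frac{1 - r^2}{n - 2}}$$

with $n - 2$ degrees of freedom.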

The critical $r$ can be calculated from the critical $t$ value $t_c$ using:

$$r_c = \frac{t_c}{\sqrt{n - 2 + t_c^2}}$$

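The same can be done using the Fisher transformation, which basically removes $r$ from the calculation of the standard error:

$$z_r = \operatorname{atanh}(r) = \frac{1}{2} \ln\left(\frac{1 + r}{1 - r}\right), \qquad SE_z = \frac{1}{\sqrt{n - 3}}$$

Then the test statistic follows a standard normal distribution:

$$Z = \frac{z_r}{SE_z}$$

The critical value, back-transformed to the correlation scale, is:

$$r_c = \tanh\left(\frac{\Phi^{-1}(1 - \alpha/2)}{\sqrt{n - 3}}\right)$$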
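As a worked example of Fisher's approach, take $n = 30$ and $\alpha = 0.05$ (values chosen here for illustration). The standard error on the transformed scale is $1/\sqrt{n - 3}$, the critical value on that scale is the standard normal quantile times the standard error, and the hyperbolic tangent maps it back to a correlation. Values are rounded to three decimals:

```latex
\begin{aligned}
SE_z &= \frac{1}{\sqrt{30 - 3}} \approx 0.192 \\
z_c &= 1.960 \times 0.192 \approx 0.377 \\
r_c &= \tanh(0.377) \approx 0.360
\end{aligned}
```

The result is close to, but not identical with, the critical correlation obtained from the $t$ distribution, because the Fisher approach relies on a normal approximation.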

In R:

gen_sigma <- function(p, r){

r + diag(1 - r, p)

}

alpha <- 0.05

n <- 30

r <- 0.5

X <- MASS::mvrnorm(n, c(0, 0), Sigma = gen_sigma(2, r), empirical = TRUE)

cor.test(X[, 1], X[, 2])

##

## Pearson's product-moment correlation

##

## data: X[, 1] and X[, 2]

## t = 3.0551, df = 28, p-value = 0.0049

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## 0.1704314 0.7289586

## sample estimates:

## cor

## 0.5

se_r <- sqrt((1 - r^2)/(n - 2))

r / se_r # should be the same t as cor.test

## [1] 3.05505

tc <- qt(alpha/2, n - 2) # critical t with n - 2 degrees of freedom

(r_c <- abs(tc/sqrt(n - 2 + tc^2))) # critical correlation

## [1] 0.3610069

pt(r_c / sqrt((1 - r_c^2)/(n - 2)), n - 2, lower.tail = FALSE) * 2 # should be alpha

## [1] 0.05

Now using Fisher's approach: